Discover how LLMs, RAG, and GraphRAG can bring valuable AI assistants to the SEO field.

We are living in an exciting time when AI advances are transforming professional practices. Since its release, GPT-3 has "assisted" professionals in the SEM field with their content-related tasks.

However, the launch of ChatGPT in late 2022 sparked a movement toward the creation of AI assistants. At the end of 2023, OpenAI introduced GPTs, which combine instructions, additional knowledge, and task execution.

The Promise Of GPTs

GPTs have paved the way for the dream of a personal assistant that now seems attainable. Conversational LLMs represent an ideal form of human-machine interface.

To develop reliable AI assistants, several problems must be solved: simulating reasoning, avoiding hallucinations, and improving the ability to use external tools.

Our Journey To Developing An SEO Assistant

For the past few months, my two long-time collaborators, Guillaume and Thomas, and I have been working on this topic. Here I present the development process of our first prototype SEO assistant.

An SEO Assistant, Why?

We want to create an assistant that will be able to:

1: Producing content as indicated by briefs. ||2: Conveying industry information about Web optimization. It ought to have the option to answer with subtlety to questions like "Should there be numerous H1 labels per page?" or "Is TTFB a positioning element?"

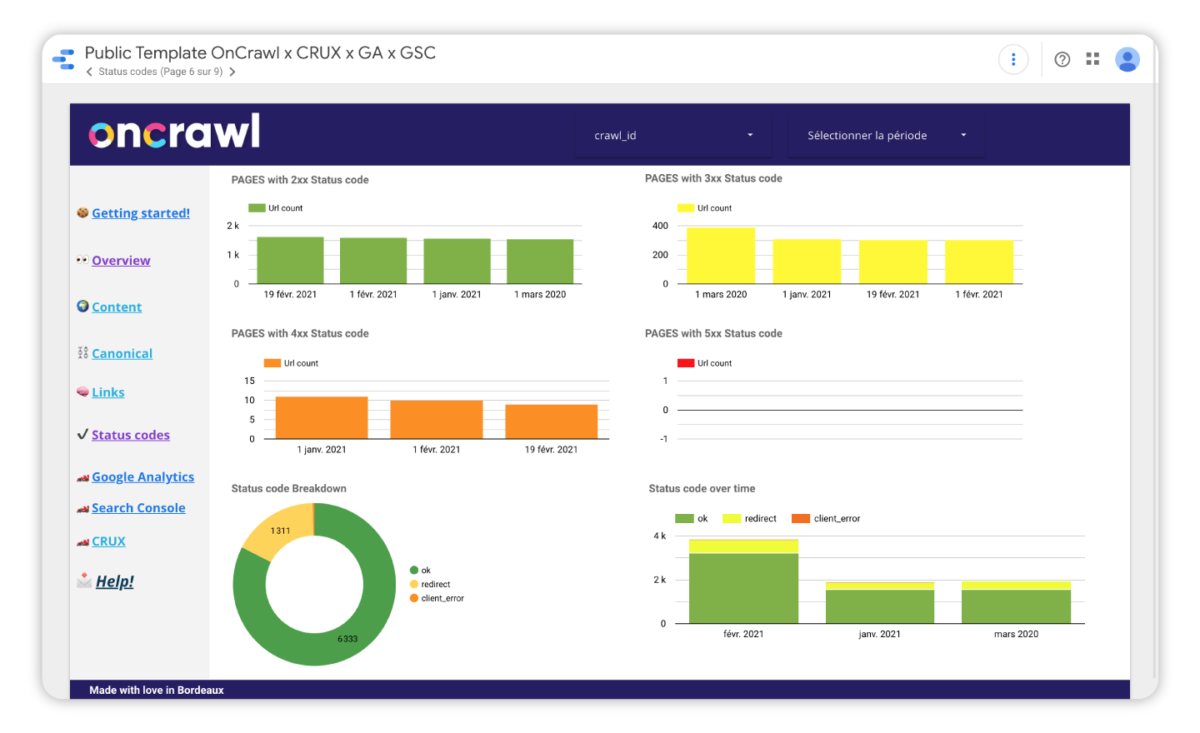

Cooperating with SaaS instruments. We as a whole use devices with graphical UIs of shifting intricacy. Having the option to utilize them through exchange works on their utilization. || 4: Arranging undertakings (e.g., dealing with a total publication schedule) and performing normal detailing errands, (for example, making dashboards).

For the first task, LLMs are already quite advanced, as long as we can constrain them to use accurate information. The last point, about planning, is still largely in the realm of science fiction. We have therefore focused our work on integrating data into the assistant using RAG and GraphRAG approaches and external APIs.

The RAG Approach

We will first create an assistant based on the retrieval-augmented generation (RAG) approach. RAG is a technique that reduces a model's hallucinations by providing it with information from external sources rather than relying only on its internal structure (its training). Intuitively, it's like interacting with a brilliant but amnesiac person who has access to a search engine.

To build this assistant, we will use a vector database. There are many available: Redis, Elasticsearch, OpenSearch, Pinecone, Milvus, FAISS, and many others. We chose the vector database provided by LlamaIndex for our prototype.

We also need a language model integration (LMI) framework. This framework links the LLM with the databases (and documents). Here too, there are many options: LangChain, LlamaIndex, Haystack, NeMo, Langdock, Marvin, etc. We used LangChain and LlamaIndex for our project. Once you choose the software stack, the implementation is fairly straightforward.

We provide documents that the framework transforms into vectors encoding their content. Many technical parameters can be tuned to improve the results. However, specialized search frameworks like LlamaIndex perform quite well out of the box.
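To make the indexing and retrieval steps concrete, here is a minimal, framework-free sketch of the RAG loop, assuming a toy bag-of-words "embedding" in place of a real embedding model and an in-memory list in place of a vector database. The documents, `embed`, `retrieve`, and `build_prompt` are hypothetical illustrations, not LlamaIndex's API or the code we used.

```python
import math
import re
from collections import Counter

# Hypothetical toy corpus standing in for the SEO books and web pages.
DOCUMENTS = [
    "Notes on H1 tags: how many H1 tags a page should contain.",
    "Notes on TTFB: time to first byte, server response and page speed.",
    "Notes on backlinks: link metrics such as referring domains.",
]

def embed(text: str) -> Counter:
    """Bag-of-words vector: a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# "Indexing": store each document next to its vector, as a vector database would.
INDEX = [(doc, embed(doc)) for doc in DOCUMENTS]

def retrieve(question: str, k: int = 2) -> list[str]:
    """Return the k documents most similar to the question."""
    q = embed(question)
    ranked = sorted(INDEX, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]

def build_prompt(question: str) -> str:
    """Augment the question with retrieved context before sending it to the LLM."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question))
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )

print(build_prompt("Should there be multiple H1 tags per page?"))
```

The LLM then receives the augmented prompt instead of the bare question; a real stack swaps `embed` for an embedding model and `INDEX` for a vector store.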

For our proof of concept, we provided a few SEO books in French and a few pages from well-known SEO websites. Using RAG results in fewer hallucinations and more complete answers.

The next image shows an example of an answer from a native LLM and from the same LLM with our RAG. In this example, the information provided by the RAG is slightly more complete than the information provided by the LLM alone.

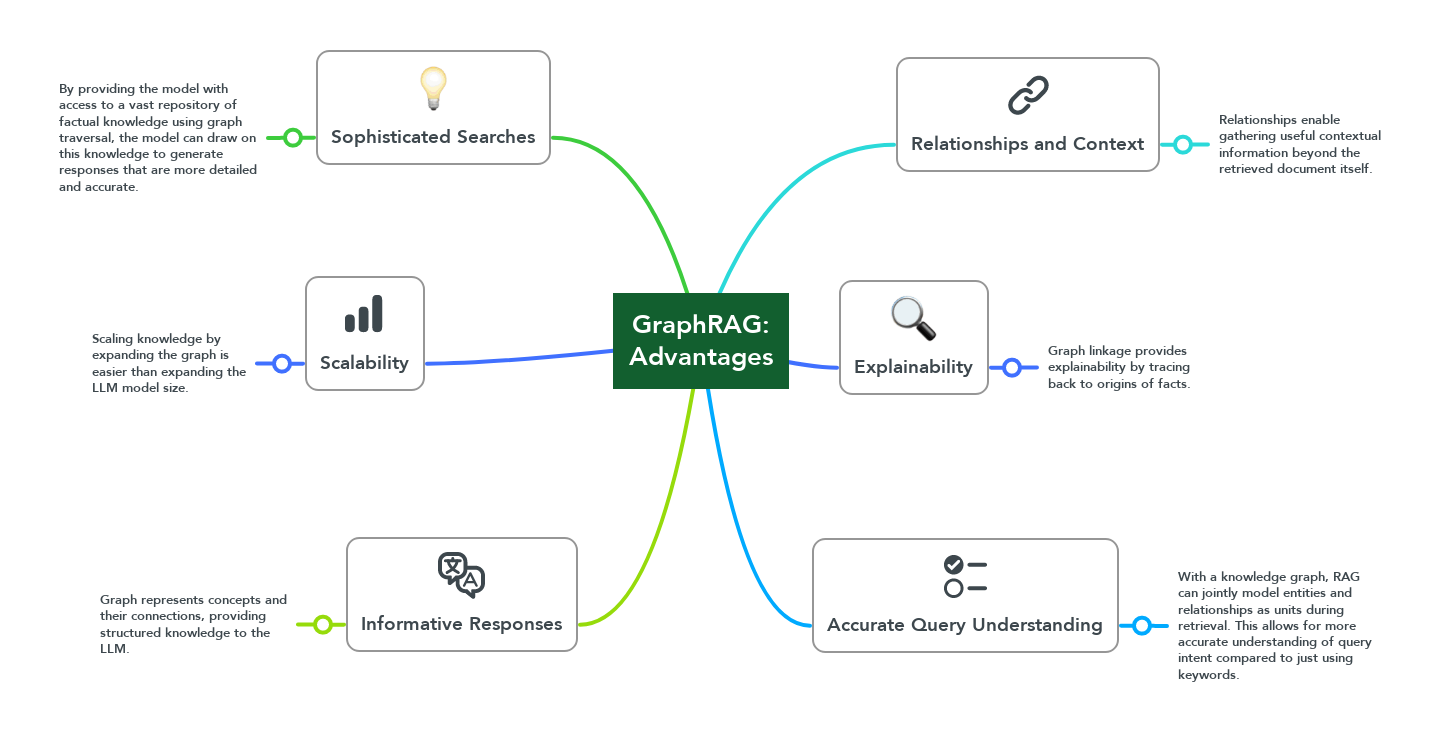

The Graph RAG Approach

RAG models enhance LLMs by integrating external documents, but they still struggle to combine these sources and to efficiently extract the most relevant information from a large corpus.

If an answer requires combining multiple pieces of information from several documents, the RAG approach may not be effective. To solve this problem, we preprocess the textual data to extract its underlying structure, which carries the semantics.

This means creating a knowledge graph, a data structure that encodes the relationships between entities as a graph. The encoding takes the form of subject-relation-object triples. The example below represents several entities and their relationships.

The entities depicted in the graph are "Bob the otter" (a named entity), but also "the river," "otter," "fur pet," and "fish." The relationships are shown on the edges of the graph.

The data is structured and indicates that Bob the otter is an otter, and that otters live in the river, eat fish, and are fur pets. Knowledge graphs are very useful because they allow inference: I can infer from this graph that Bob the otter is a fur pet!
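The inference in this example can be sketched with a single forward-chaining rule, assuming the relation names below (they are hypothetical labels for the edges in the figure): if X "is a" Y and Y has some relation to Z, then X inherits that relation to Z. Applying the rule until nothing new appears derives the fact that Bob the otter is a fur pet.

```python
# Triples from the example graph: (subject, relation, object).
TRIPLES = {
    ("Bob the otter", "is a", "otter"),
    ("otter", "lives in", "the river"),
    ("otter", "eats", "fish"),
    ("otter", "is a", "fur pet"),
}

def infer(triples: set[tuple[str, str, str]]) -> set[tuple[str, str, str]]:
    """Apply 'X is a Y, Y rel Z => X rel Z' until no new triple is produced."""
    facts = set(triples)
    while True:
        new = {
            (x, rel, z)
            for (x, r1, y) in facts if r1 == "is a"
            for (y2, rel, z) in facts if y2 == y
        } - facts
        if not new:  # fixpoint reached: nothing left to derive
            return facts
        facts |= new

print(("Bob the otter", "is a", "fur pet") in infer(TRIPLES))  # True
```

The derived triple is not stored in the original graph; it is obtained by chaining two edges, which is exactly the multi-document combination that plain RAG struggles with.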

Building knowledge graphs has long been done with NLP techniques. However, LLMs make it easier to create such graphs thanks to their ability to process text. We will therefore ask an LLM to build the knowledge graph.
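As a rough illustration of asking an LLM for triples, the sketch below pairs a hypothetical extraction prompt with a parser for the model's answer. `fake_llm` is a hypothetical stand-in that returns a canned reply so the example runs offline; LlamaIndex ships its own extraction prompts, and this is not that prompt.

```python
import re

# Hypothetical extraction prompt; {text} is filled with a chunk of a document.
EXTRACTION_PROMPT = (
    "Extract knowledge triples from the text below.\n"
    "Return one triple per line, formatted as (subject, relation, object).\n"
    "Text: {text}\n"
    "Triples:\n"
)

# One well-formed "(subject, relation, object)" line; parts may not contain commas.
TRIPLE_LINE = re.compile(r"^\(\s*([^,]+?)\s*,\s*([^,]+?)\s*,\s*([^,]+?)\s*\)$")

def parse_triples(llm_output: str) -> list[tuple[str, str, str]]:
    """Keep only the lines that match the triple format; ignore any extra chatter."""
    triples = []
    for line in llm_output.splitlines():
        match = TRIPLE_LINE.match(line.strip())
        if match:
            triples.append(match.groups())
    return triples

def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call."""
    return "(Bob the otter, is a, otter)\n(otter, eats, fish)\nSome chatter the parser ignores"

prompt = EXTRACTION_PROMPT.format(text="Bob the otter is an otter. Otters eat fish.")
print(parse_triples(fake_llm(prompt)))
# [('Bob the otter', 'is a', 'otter'), ('otter', 'eats', 'fish')]
```

Strict parsing matters because LLM output is free text; discarding malformed lines keeps the graph clean at the cost of occasionally losing a fact.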

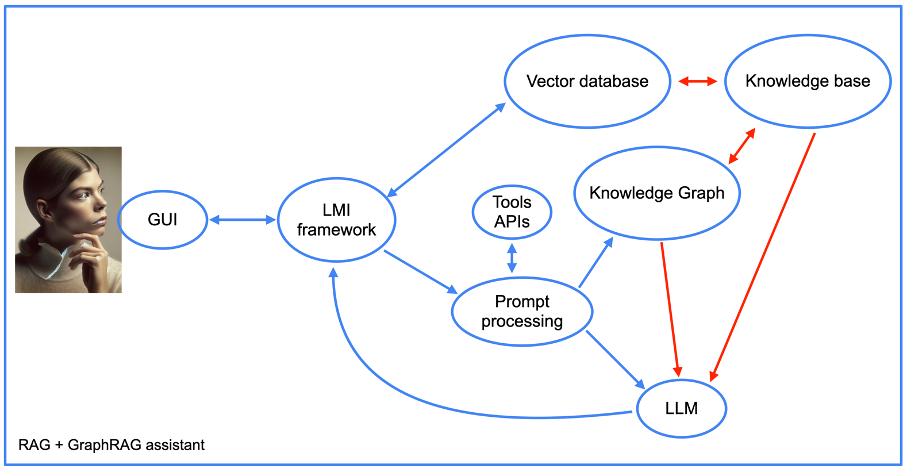

Of course, the LMI framework efficiently guides the LLM through this task. We used LlamaIndex for our project. The architecture of our assistant also becomes more complex with the GraphRAG approach (see next image).

We will return later to the integration of tool APIs; for the rest, we see the elements of a RAG approach, along with the knowledge graph. Note the presence of a "prompt processing" component.

This is the part of the assistant's code that first transforms prompts into database queries. It then performs the reverse operation, crafting a human-readable response from the knowledge graph outputs.
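The two directions of the prompt processing component can be sketched as follows, assuming naive string matching for the prompt-to-query step and an in-memory list instead of a graph database. In our assistant both steps are delegated to the LLM and the query runs against NebulaGraph; every function here is a hypothetical simplification.

```python
TRIPLES = [
    ("Bob the otter", "is an", "otter"),
    ("otter", "lives in", "the river"),
    ("otter", "eats", "fish"),
]

def find_entities(question: str) -> list[str]:
    """Prompt -> query: pick the graph entities whose names appear in the question."""
    q = question.lower()
    subjects = {s for s, _, _ in TRIPLES}
    return sorted(s for s in subjects if s.lower() in q)

def query_graph(entities: list[str]) -> list[tuple[str, str, str]]:
    """Stand-in for the graph database query: triples whose subject was matched."""
    return [t for t in TRIPLES if t[0] in entities]

def verbalize(facts: list[tuple[str, str, str]]) -> str:
    """Graph output -> readable text (an LLM would normally phrase this step)."""
    if not facts:
        return "No matching facts in the graph."
    return " ".join(f"{s[0].upper()}{s[1:]} {r} {o}." for s, r, o in facts)

def answer_from_graph(question: str) -> str:
    return verbalize(query_graph(find_entities(question)))

print(answer_from_graph("What does Bob the otter eat?"))
# Bob the otter is an otter. Otter lives in the river. Otter eats fish.
```

Matching "otter" as well as "Bob the otter" pulls in the inherited facts, which is how the graph supplies context that a single document chunk might not contain.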

The following image shows the actual code we used for prompt processing. You can see in this image that we used NebulaGraph, one of the first projects to deploy the GraphRAG approach.

The prompts are quite simple. In fact, most of the work is done natively by the LLM. The better the LLM, the better the result, but even open-source LLMs give quality results. We fed the knowledge graph with the same information we used for the RAG. Is the quality of the answers better? Let's look at the same example.

I let the reader judge whether the information given here is better than with the previous approaches, but I feel that it is more structured and complete. However, the drawback of GraphRAG is the latency in obtaining an answer (I'll come back to this UX issue later).

Integrating SEO Tools Data

At this point, we have an assistant that can write and deliver knowledge more accurately. But we also want the assistant to be able to deliver data from SEO tools. To reach that goal, we will use LangChain to interact with APIs using natural language.

This is done with functions that explain to the LLM how to use a given API. For our project, we used the API of the tool Babbar (full disclosure: I'm the CEO of the company that develops the tool).

The image above shows how the assistant can gather information about linking metrics for a given URL. Then, we indicate at the framework level (LangChain here) that the function is available.

These three lines set up a LangChain tool from the function above and initialize a chat that crafts the answer from the data. Note that the temperature is zero.

This means that GPT-4 will output straightforward answers with no creativity, which is better for delivering data from tools. Again, the LLM does most of the work here: it transforms the natural language question into an API request and then turns the API output back into natural language.
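The round trip described above can be sketched without any framework, assuming OpenAI-style function calling: the model sees a JSON Schema description of the tool, replies with a tool call (name plus JSON-encoded arguments), and our code executes it and returns JSON for the model to phrase. `get_url_link_metrics` and its fields are hypothetical placeholders, not the Babbar API, and this is not LangChain's API; no request is actually sent here.

```python
import json

def get_url_link_metrics(url: str) -> dict:
    """Hypothetical wrapper: a real version would call the SEO tool's API."""
    return {"url": url, "backlinks": 0, "referring_domains": 0}  # placeholder values

TOOLS = {"get_url_link_metrics": get_url_link_metrics}

# Tool description in the OpenAI function-calling format: this is what "explains"
# the function to the LLM.
TOOL_SPECS = [{
    "type": "function",
    "function": {
        "name": "get_url_link_metrics",
        "description": "Return link metrics (backlinks, referring domains) for a URL.",
        "parameters": {
            "type": "object",
            "properties": {"url": {"type": "string", "description": "Absolute URL to analyze"}},
            "required": ["url"],
        },
    },
}]

def build_request(question: str) -> dict:
    """Chat request payload; temperature 0 minimizes randomness when reporting data."""
    return {
        "model": "gpt-4",
        "temperature": 0,
        "tools": TOOL_SPECS,
        "messages": [{"role": "user", "content": question}],
    }

def run_tool_call(tool_call: dict) -> str:
    """Execute a tool call shaped like the model's reply and return JSON for the model."""
    func = TOOLS[tool_call["function"]["name"]]
    args = json.loads(tool_call["function"]["arguments"])
    return json.dumps(func(**args))

# Simulated model reply asking for the tool (a real one comes back from the API).
call = {"function": {"name": "get_url_link_metrics",
                     "arguments": json.dumps({"url": "https://example.com/"})}}
print(run_tool_call(call))
```

The tool's JSON result is appended to the conversation as a tool message, and a second call lets the LLM turn it into a natural-language answer.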

Frequently Asked Questions

What is a knowledge graph in LLM?

Knowledge graphs are graphs enriched with data over time, so they capture more detail and context about real-world entities and their relationships.

What is the knowledge graph in Gen AI?

A knowledge graph transforms data from multiple sources into a graph-based model of entities, their attributes, and how they relate. The graph is continuously enriched with new data to uncover patterns and hidden relationships.

Can LLMs generate knowledge graphs?

Yes. With LLMs, anyone can build knowledge graphs and efficiently analyze large corpora of unstructured data. Whether working with reports, web pages, or other forms of text, we can automate the construction of knowledge graphs and easily discover new insights.

What are the properties of knowledge graphs?

The main components of a knowledge graph include entities, which represent the data of the organization or domain, and relationships, which show how those entities interact with or relate to one another. Relationships give context to the data.
